kserve

KServe

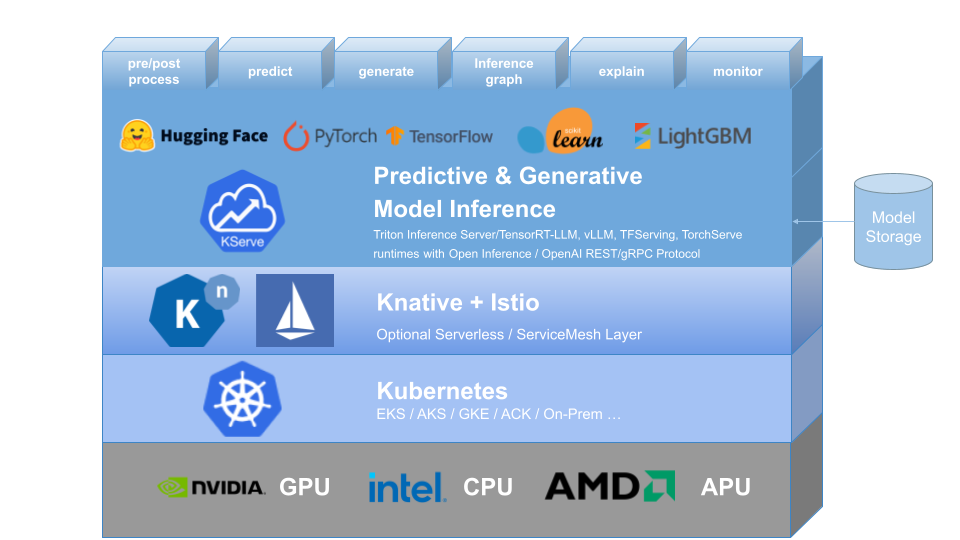

KServe is a standardized distributed generative and predictive AI inference platform for scalable, multi-framework deployment on Kubernetes.

KServe is being used by many organizations and is a Cloud Native Computing Foundation (CNCF) incubating project.

For more details, visit the KServe website.

Why KServe?

Single platform that unifies Generative and Predictive AI inference on Kubernetes. Simple enough for quick deployments, yet powerful enough to handle enterprise-scale AI workloads with advanced features.

Features

Generative AI

- 🧠 LLM-Optimized: OpenAI-compatible inference protocol for seamless integration with large language models

- 🚅 GPU Acceleration: High-performance serving with GPU support and optimized memory management for large models

- 💾 Model Caching: Intelligent model caching to reduce loading times and improve response latency for frequently used models

- 🗂️ KV Cache Offloading: Advanced memory management with KV cache offloading to CPU/disk for handling longer sequences efficiently

- 📈 Autoscaling: Request-based autoscaling capabilities optimized for generative workload patterns

- 🔧 Hugging Face Ready: Native support for Hugging Face models with streamlined deployment workflows

Predictive AI

- 🧮 Multi-Framework: Support for TensorFlow, PyTorch, scikit-learn, XGBoost, ONNX, and more

- 🔀 Intelligent Routing: Seamless request routing between predictor, transformer, and explainer components with automatic traffic management

- 🔄 Advanced Deployments: Canary rollouts, inference pipelines, and ensembles with InferenceGraph

- ⚡ Autoscaling: Request-based autoscaling with scale-to-zero for predictive workloads

- 🔍 Model Explainability: Built-in support for model explanations and feature attribution to understand prediction reasoning

- 📊 Advanced Monitoring: Enables payload logging, outlier detection, adversarial detection, and drift detection

- 💰 Cost Efficient: Scale-to-zero on expensive resources when not in use, reducing infrastructure costs

Learn More

To learn more about KServe, how to use various supported features, and how to participate in the KServe community, please follow the KServe website documentation. Additionally, we have compiled a list of presentations and demos to dive through various details.

:hammer_and_wrench: Installation

Standalone Installation

- Standard Kubernetes Installation: Compared to Serverless Installation, this is a more lightweight installation. However, this option does not support canary deployment and request based autoscaling with scale-to-zero.

- Knative Installation: KServe by default installs Knative for serverless deployment for InferenceService.

- ModelMesh Installation: You can optionally install ModelMesh to enable high-scale, high-density and frequently-changing model serving use cases.

- Quick Installation: Install KServe on your local machine.

Kubeflow Installation

KServe is an important addon component of Kubeflow, please learn more from the Kubeflow KServe documentation. Check out the following guides for running on AWS or on OpenShift Container Platform.

:flight_departure: Create your first InferenceService

:bulb: Roadmap

:blue_book: InferenceService API Reference

:toolbox: Developer Guide

:writing_hand: Contributor Guide

:handshake: Adopters

Star History

Contributors

Thanks to all of our amazing contributors!