Announcing KServe v0.15 - Advancing Generative AI Model Serving

Published on May 27, 2025

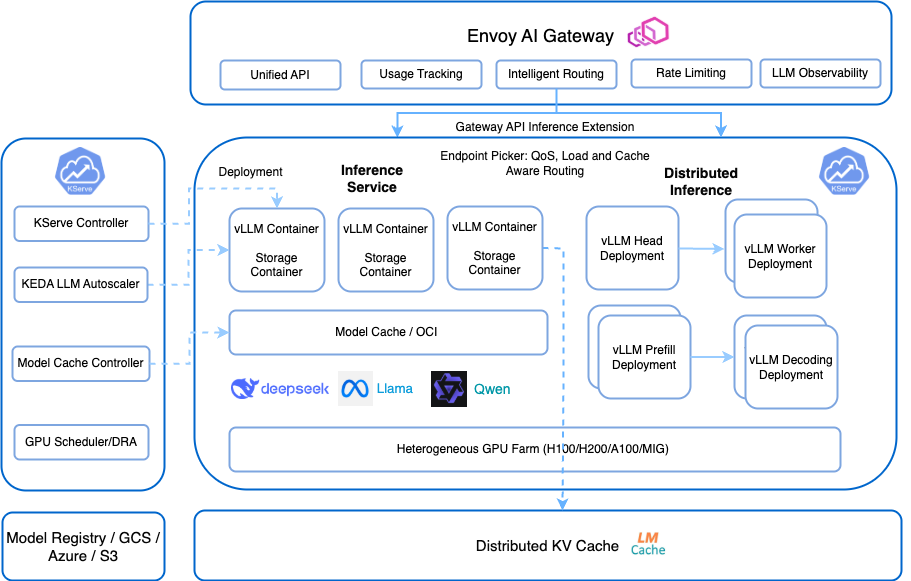

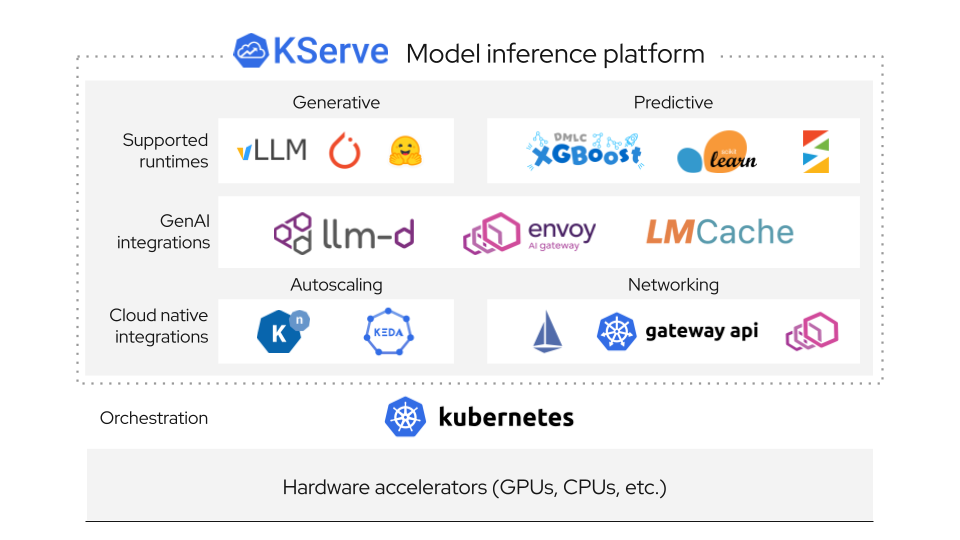

We are thrilled to announce KServe v0.15, a release that advances serving for both predictive and generative AI models. It introduces enhanced support for generative AI workloads, including advanced features for serving large language models (LLMs), improved model and KV caching mechanisms, and integration with Envoy AI Gateway.